
Algorithmic education – Econlib

Ian Leslie's work focuses on human behavior. He has appeared on two previous episodes of EconTalk (Ian Leslie on Curiosity and Ian Leslie on Conflicted). In this episode, host Russ Roberts and Leslie continue their discussion of human behavior, exploring Leslie's contention that AI is already changing the way we think. It's not just that the machines imitate us; we have begun to imitate the machine in profound ways that change what and how we create.

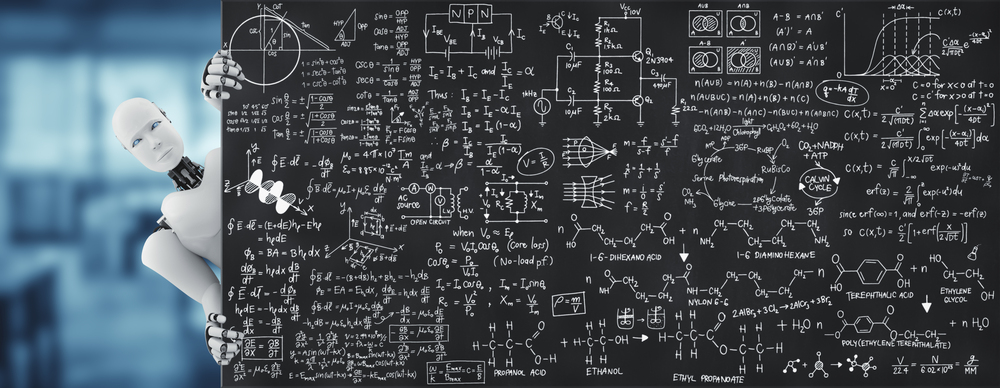

Roberts and Leslie spend some time discussing how students actually learn to write using a very simple algorithm: the five-paragraph essay. As a former teacher, I'm glad I left the classroom before the rise of ChatGPT and similar tools. Yet some of the cultural and technological forces Leslie describes were evident in student writing and thinking long before generative AI was released to the public. We have certainly been living in a world shaped by algorithms for years: social media and search algorithms have long shaped our information flow and our social circles. The ubiquity of autocorrect and the digital organization of information influence the way adults and children learn. The easy isolation that personal devices enable is also changing the way we connect with, process, and share information.

If Ian Leslie's argument is correct, the algorithmic transfer of knowledge predates even these technologies. (As a product of late 20th- and early 21st-century schools, and as a teacher, I tend to agree.) As Leslie notes:

…basically we taught them, we taught many of them, that good writing means following a set of rules and that an essay should have a five-part structure. So instead of helping them understand the importance of structure, the many ways you can approach structure, and the subtleties of that question, we're tempted to say, "Five points. That's what you want to hit in an essay." The student says, "Okay, I can follow that rule." Instead of helping them understand what it means to really care about depth and originality and interest in their writing, we say: "These are the five principles you should follow. Here's how long a paragraph should be. Here's how your sentences should be. Here's where the prepositions go or don't go."

And actually we are programming them. We give them very simple programs, simple algorithms to follow.

And the result is that we often get very boring, rather superficial responses back. So it's really no wonder that ChatGPT can produce these essays, because it essentially follows a similar process. It's just that ChatGPT has a huge amount of training data, so it works much faster.

And so we should worry about it, not because it is about to become some kind of super-intelligent consciousness, but because of the way we have trained ourselves to write algorithmic essays.

It is no surprise that modern schooling has relied on these "simple programs, simple algorithms." Quality education at scale is not an easy proposition, and the simplistic five-paragraph model reliably produces a mediocre but acceptable product. This goes a long way toward explaining the mediocrity common to the average human-written student essay, even before generative AI became widely accessible. Now the problem is more immediate: essays actually composed by generative AI. I don't envy today's teachers who try to teach around this, but the problem existed before the newest, most powerful tool came along. Now it has only accelerated.

Naturally, the applications of AI in education extend far beyond the classroom. Most of us use Google or other search engines to quickly look up information or images. Now that much of that content is influenced or created by AI, our perception of reality is filtered by the machine. An odd example: while scrolling through my Reddit feed, I saw several complaints about AI-generated images of wedding flowers posted to sites like Pinterest, which many people use for design and planning inspiration. Why the complaints? Because the wildflower bouquets in some very realistic-looking images were physically impossible: the flower types depicted do not have stems strong enough to be used in a wedding bouquet.

- What changes due to AI do you notice in your environment? Have they changed the way you interact with others, professionally or personally? Do they make your life better or worse overall?

- What counts as AI? Autocorrect and autocomplete are much simpler than ChatGPT but even more ubiquitous in our digital world, and they have the potential to change the words we use when communicating with each other. Do they make our communication better, or just more algorithmic?

- Regardless of the level of technology, some learning is algorithmic, repetitive, and not particularly creative. Beginning piano students learn scales, math students memorize formulas, and writers and artists learn through imitation. Where does imitation stop and creativity begin? And when should that transition happen?

- What makes creativity human? What is missing or inauthentic in machine output, even very complex output?

Additional links:

Ian Leslie on why curiosity is like a muscle, Quercus Books

Ian Leslie on why we should keep learning and being curious, The Royal Society of the Arts

Nancy Vander Veer holds a BA in Classics from Samford University. She taught high school Latin across the U.S. and held program and fundraising positions at the Paideia Institute. Based in Rome, Italy, she is currently completing a master’s degree in European social and economic history at Philipps-Universität Marburg.