Chatbots make poor multilingual healthcare consultants, research shows

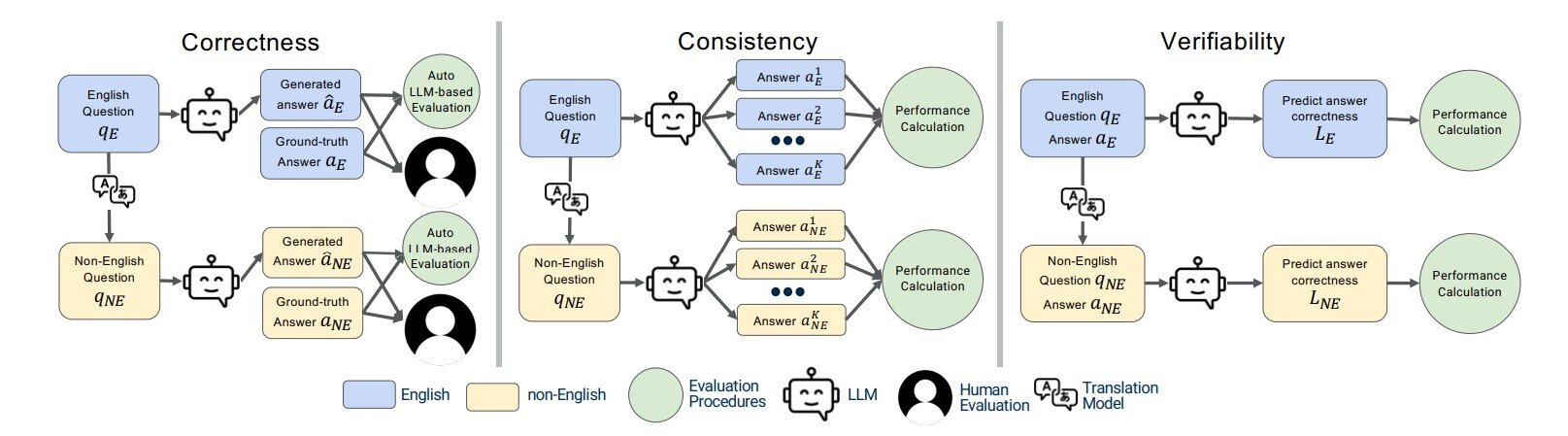

Evaluation pipelines for correctness, consistency and verifiability criteria in the XLingEval framework. Credit: arXiv (2023). DOI: 10.48550/arxiv.2310.13132

Researchers at Georgia Tech say non-English speakers should not rely on chatbots like ChatGPT to provide valuable healthcare advice.

A team of researchers from Georgia Tech’s College of Computing has developed a framework for assessing the capabilities of large language models (LLMs). Ph.D. students Mohit Chandra and Yiqiao (Ahren) Jin are the co-lead authors of the article “Better to Ask in English: Cross-Lingual Evaluation of Large Language Models for Healthcare Queries.” The paper is published on the arXiv preprint server.

The paper’s findings reveal a gap in how well LLMs answer health-related questions in languages other than English. Chandra and Jin point out the limitations of LLMs for users and developers, but also emphasize their potential.

Their XLingEval framework cautions non-English speakers against using chatbots as an alternative to doctors for advice. However, models can improve by expanding the data pool with multilingual source material, such as their proposed XLingHealth benchmark.

“For users, our research supports what ChatGPT’s website already says: chatbots make a lot of mistakes, so we shouldn’t rely on them for critical decision-making or for information that requires high accuracy,” Jin said.

“Since we have observed these language differences in their performance, LLM developers should focus on improving accuracy, correctness, consistency and reliability in other languages,” Jin said.

Using XLingEval, the researchers found that chatbots are less accurate in Spanish, Chinese and Hindi than in English. By focusing on correctness, consistency and verifiability, they discovered:

- Correctness dropped by 18% when the same questions were asked in Spanish, Chinese and Hindi.

- Non-English responses were 29% less consistent than their English counterparts.

- Non-English responses were 13% less verifiable overall.

XLingHealth includes question-answer pairs that chatbots can reference, which the group hopes will lead to improvement within LLMs.

The HealthQA dataset uses specialized healthcare articles from the popular healthcare website Patient. It contains 1,134 health-related question-answer pairs excerpted from the original articles. LiveQA is a second dataset containing 246 question-answer pairs, constructed from frequently asked questions (FAQ) platforms associated with the US National Institutes of Health (NIH).

For drug-related questions, the group built a MedicationQA component. This dataset contains 690 questions drawn from anonymous consumer questions submitted to MedlinePlus. The answers come from medical references, such as MedlinePlus and DailyMed.

In their tests, the researchers posed more than 2,000 medical questions to ChatGPT-3.5 and MedAlpaca. MedAlpaca is a healthcare question-answer chatbot trained on medical literature. Yet more than 67% of the answers to non-English questions were irrelevant or contradictory.
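As a quick check on the "more than 2,000" figure, the three dataset sizes reported above can be tallied. The sketch below uses only the counts given in this article; the names `DATASET_SIZES` and `total_questions` are illustrative and do not come from the researchers' code.

```python
from typing import Mapping

# Question counts as reported in this article for each XLingHealth dataset.
DATASET_SIZES = {
    "HealthQA": 1134,
    "LiveQA": 246,
    "MedicationQA": 690,
}


def total_questions(sizes: Mapping[str, int]) -> int:
    """Return the combined number of questions across all datasets."""
    return sum(sizes.values())


if __name__ == "__main__":
    print(f"Total questions: {total_questions(DATASET_SIZES)}")
```

The total comes to 2,070, which is consistent with the article's description.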

“We see much worse performance in the case of MedAlpaca than ChatGPT,” Chandra said. “Most of the data for MedAlpaca is in English, so it struggled to answer questions in non-English languages. GPT also struggled, but it performed much better than MedAlpaca because it had some sort of training data in other languages.”

Ph.D. student Gaurav Verma and postdoctoral researcher Yibo Hu co-authored the paper.

Jin and Verma study under Srijan Kumar, an assistant professor in the School of Computational Science and Engineering, and Hu is a postdoc in Kumar’s lab. Chandra is advised by Munmun De Choudhury, associate professor at the School of Interactive Computing.

The team presented their paper at The Web Conference, which took place from May 13 to 17 in Singapore. The annual conference focuses on the future direction of the Internet. The group’s presentation was a fitting match given the location of the conference.

English and Chinese are the most common languages in Singapore. The group tested Spanish, Chinese and Hindi because these are the most spoken languages in the world after English. Personal curiosity and background played a role in inspiring the research.

“ChatGPT was very popular when it was launched in 2022, especially among us computer science students who are always exploring new technology,” Jin said. “Non-native English speakers like Mohit and I noticed early on that chatbots underperformed in our native languages.”

More information:

Yiqiao Jin et al., Better to Ask in English: Cross-Lingual Evaluation of Large Language Models for Healthcare Queries, arXiv (2023). DOI: 10.48550/arxiv.2310.13132

Citation: Chatbots make poor multilingual healthcare consultants, research shows (2024, May 28) retrieved May 28, 2024 from https://medicalxpress.com/news/2024-05-chatbots-poor-multilingual-health.html
