The problem with AI is the word ‘intelligence’

As a Financial Times headline puts it, “AI in finance is like moving from typewriters to word processors” (June 16, 2024). But not much further, I suspect, despite all the excitement (see “Ray Kurzweil on how AI will transform the physical world,” The Economist, June 17, 2024). There are at least doubts about the ‘generative’ form of AI. (IBM defines generative AI as referring to “deep learning models that can generate high-quality text, images, and other content from the data they are trained on.”)

The conversational and grammatical capabilities of an AI bot like ChatGPT are impressive. This bot writes better, and seems to be a better conversationalist, than a significant portion of humans. I’m told that he (or she, except that the thing has no sex, so I’m using the neutral ‘he’ anyway) performs object identification and classification tasks efficiently and can do simple coding. It is a very advanced program. But he is crucially dependent on his gigantic database, across which he makes countless comparisons by brute electronic force. I have had opportunities to verify that his analytical and artistic capabilities are limited.

Sometimes they are astonishingly limited. Very recently, I spent a few hours with the latest version of DALL-E (the artistic side of ChatGPT) trying to get him to understand the following request correctly:

Generate an image of a strong individual (a woman) walking in the opposite direction of a crowd led by a king.

He just couldn’t understand it. I had to elaborate, rephrase and re-explain many times, as in this modified instruction:

Create an image of a strong and individualistic individual (a woman) walking in the opposite direction of an inconspicuous crowd led by a king. The woman stands in the foreground and walks proudly from west to east. The crowd led by the king is in the background and runs from east to west. They go in opposite directions. The camera is facing south.

(By “close to background” I meant “close to background.” No one is perfect.)

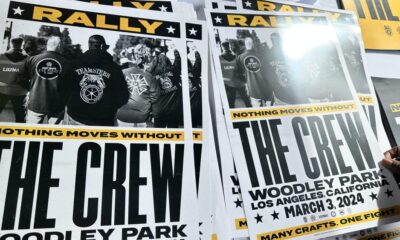

DALL-E was able to repeat my directives when I tested him, but he couldn’t see the glaring errors in his visual representations, as if he didn’t understand. He produced many images of the woman on the one hand, and the king and his followers on the other, walking in the same direction. The first image below provides an intriguing example of this fundamental misunderstanding. When the bot finally drew a picture in which the woman and the king walked in opposite directions (shown as the second image below), the king’s followers had disappeared! A toddler learning to draw recognizes his mistakes better when they are explained to him.

I wrote that DALL-E behaved ‘as if he didn’t understand’, and that is indeed the problem: the machine, basically a piece of code and a big database, simply does not understand. What he does is impressive compared to what computer programs could do until now, but it is not thinking or understanding, that is, intelligence as we know it. It is very advanced computation. ChatGPT does not know that he is thinking, which means that he does not think and cannot understand. He simply repeats patterns he finds in his database. It resembles analogical thinking, but without the thinking: thinking implies analogies, but analogies do not imply thinking. So it is not surprising that DALL-E did not suspect the possible individualist interpretation of my instruction, which I had not spelled out: a sovereign individual refusing to follow the crowd loyal to the king. A computer program is not an individual and does not understand what it means to be one. As suggested by the image at the top of this post (also drawn by DALL-E after much insistence, and reproduced below), AI cannot understand Descartes’ statement, and I suspect it never will. Cogito ergo sum (I think, therefore I am). And this is not because he cannot find Latin in his databases.

Nowhere in his database could DALL-E find a robot with a cactus on its head. The other Dalí, Salvador, could easily have imagined that.

Of course, no one can predict the future and how AI will develop. Caution and humility are required. Advances in computation are likely to produce what we would now consider miracles. But from what we know about thinking and understanding, we can safely conclude that electronic devices, no matter how useful they are, will probably never be intelligent. What is missing in “artificial intelligence” is the intelligence.

******************************

DALL-E’s erroneous illustration of a simple request by P. Lemieux

Another misinterpretation by DALL-E of a simple request

Self-portrait of DALL-E, influenced by your humble blogger