Business

UK implements strict measures against harmful algorithms to protect young people online

Social media giants TikTok and Instagram are in the spotlight as Britain takes decisive action to protect its young people from harmful online content.

Under Ofcom’s proposed rules, these platforms will be required to rein in the algorithms that push harmful material to children.

The strict code of practice Ofcom has outlined aims to clean up social media and search engines under the powers of the Online Safety Act. Platforms will now need to implement robust age verification measures to prevent minors from accessing explicit content and material that promotes self-harm, suicide and eating disorders.

Companies that fail to comply face fines of up to £18 million or 10% of global turnover, whichever is higher. Their services could also be blocked, and senior managers could face criminal prosecution.

The Online Safety Act positions Britain as a world leader in the fight against harmful online content and aims to make the country ‘the safest place in the world to be online’. The initiative goes further than measures in the US and follows similar legislation in Australia and Europe.

Messaging services such as WhatsApp and Snapchat are also covered. They will need permission before adding under-18s to group chats, and must give young users more control over their online interactions, including the ability to block and mute accounts and switch off comments.

Dame Melanie Dawes, chief executive of Ofcom, stressed the importance of the measures, saying: “Our proposed codes place the responsibility for keeping children safer firmly on technology companies. They will have to tame aggressive algorithms that push harmful content to children and introduce age checks so that children get an online experience that is right for their age.”

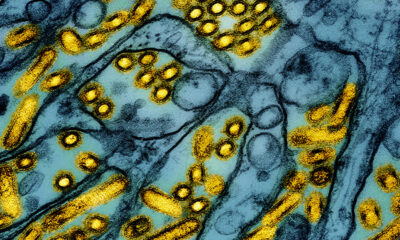

Ofcom’s decision to tackle algorithms follows investigations into their role in spreading dangerous content to children. TikTok in particular has come under scrutiny for its algorithmic feed, which quickly exposes users to potentially harmful material.

For age verification to be effective, platforms will need to use robust methods, such as facial age estimation technology and photo-ID checks, to protect children from online risks.

Michelle Donelan, the technology secretary, called the measures crucial. She emphasised that platforms must introduce the kind of age checks young people already encounter in the real world, and tackle algorithms that too readily expose them to harmful content.

Sir Peter Wanless, chief executive of the NSPCC, described the draft code as “a welcome step in the right direction”.

Ian Russell, whose daughter Molly took her own life aged 14 after viewing disturbing content on social media, said: “Ofcom’s job was to seize the moment and propose bold and decisive action that will help protect children from widespread but inherently avoidable harm.”